More and more projects are looking at backing up object storage. When starting a backup project, one of the first questions to answer is how large the protected workloads are and what their daily change rate is. While the total size is usually monitored with existing tools or can be extracted fairly easily, the daily change rate proves more challenging.

In this article, we’ll guide you step by step through monitoring daily change rates in Azure Blob Storage using diagnostic settings, a Log Analytics workspace, and Kusto Query Language (KQL). If you’re not looking at backing up object storage, the article may still be useful, since real-time monitoring of object storage applies in other situations too.

Why Daily Change Rates?

The daily change rate of the source object storage directly impacts the space required on the backup repository, and it also directly influences the sizing of components such as proxies and cache repositories.

Why Log Analytics?

Log Analytics provides near-real-time tracking of blob operations because it ingests Azure Monitor logs (storage diagnostic logs), while KQL offers powerful filtering and aggregation functions. It’s easy to set up and lets you extract data without external processing tools. There is a downside: Log Analytics may incur additional costs, as detailed here.

The proposed solution is intended to run for a short period, such as 7 days, to extract the daily change rates for the protected blobs.

Step 1: Configuring a Log Analytics Workspace

Before you can send diagnostic logs to Log Analytics, ensure that you have a Log Analytics workspace set up:

· In the Azure Portal, navigate to Log Analytics workspaces using the global search bar.

· Click + Create to start a new workspace, or select an existing one if you already have one in your desired subscription and region.

· For a new workspace, provide a Name, choose a Subscription, select a Resource Group (or create one), and pick a Region that aligns with your storage account for optimal performance.

· Review the settings and click Review + Create, then Create to provision the workspace.

Once your Log Analytics workspace is ready, you can proceed with connecting your storage account’s diagnostic settings to this workspace.

To meet compliance, performance, and backup sizing needs, use regional workspaces to determine the requirements in each region.

Step 2: Enable Diagnostic Settings for Azure Blob Storage

Azure Storage accounts provide diagnostic logs that capture activity at both the account and blob level, including read, write, and delete operations. To monitor daily change rates, you need to ensure that diagnostic logging is enabled and that logs are routed to a Log Analytics workspace.

Configuring Diagnostic Settings

· Navigate to your Azure Storage account in the Azure Portal.

· In the left pane, click Monitoring > Diagnostic settings.

· Click the blob resource.

· Click + Add diagnostic setting.

· Provide a name for your setting (e.g., BlobActivityLogs).

· Under Logs, select the categories to monitor (Storage Write).

· Choose Send to Log Analytics workspace and select your target workspace.

· Click Save to apply your configuration.

Once enabled, the diagnostic settings will begin sending blob activity logs to the designated Log Analytics workspace.

Step 3: Understanding the Logged Data

After a short period (5-10 minutes), data will start accumulating in your Log Analytics workspace. The logs sent from Azure Blob Storage cover a range of operation types, such as PutBlob (create) and SetBlobProperties (modify), among others.

Typically, these logs land in the StorageBlobLogs table. Key columns to pay attention to include:

· TimeGenerated: The timestamp of the action.

· OperationName: The specific blob operation (e.g., PutBlob).
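Before building the change-rate query, it can help to confirm that logs are arriving and to see which operations they contain. The following is a minimal sketch that uses only the table and columns named above; the one-hour window is an arbitrary choice for a quick check, not a recommendation.

```kql
// Count the logged blob requests per operation over the last hour.
// An empty result usually means no matching logs have reached the workspace yet.
StorageBlobLogs
| where TimeGenerated > ago(1h)
| summarize Requests = count() by OperationName
| sort by Requests desc
```

If only the Storage Write category is enabled, read operations such as GetBlob should not appear in the result.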

Step 4: Querying the Data with Kusto Query Language (KQL)

KQL is a powerful query language designed for ad-hoc analysis of structured, semi-structured, and unstructured data. With KQL, you can aggregate and filter Blob Storage changes to calculate daily change rates.

Here’s a basic KQL query to get data for the last 7 days and present changes on a daily basis:

StorageBlobLogs
| where TimeGenerated > ago(7d)
| where OperationName in ("PutBlob", "PutBlock", "PutBlockList", "SetBlobProperties", "SetBlobMetadata")
| summarize TotalChangedGB = sum(todouble(ContentLengthHeader)) / 1073741824 by bin(TimeGenerated, 1d)
| sort by TimeGenerated asc

This query looks only at create or modify operations:

- PutBlob: Uploads a new blob or overwrites an existing one.
- PutBlock: Uploads a block (part of a blob); useful for tracking new blob uploads in block blob scenarios.
- PutBlockList: Commits the uploaded blocks into a blob.
- SetBlobProperties: Updates system properties of a blob (e.g., content type, cache control). These are metadata-level changes, not content changes.
- SetBlobMetadata: Updates user-defined metadata associated with a blob. Again, this doesn’t alter the blob’s content but changes its descriptive attributes.
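To see how much each of these operations contributes to the total, the same filter can be grouped by operation instead of by day. This is a sketch built on the query above; the column names Requests and ChangedGB are illustrative labels, not part of the original query.

```kql
// Break down the last 7 days of create/modify activity by operation type.
StorageBlobLogs
| where TimeGenerated > ago(7d)
| where OperationName in ("PutBlob", "PutBlock", "PutBlockList", "SetBlobProperties", "SetBlobMetadata")
| summarize Requests = count(),
            ChangedGB = sum(todouble(ContentLengthHeader)) / 1073741824
    by OperationName
| sort by ChangedGB desc
```

Because the metadata-level operations do not upload blob content, most of the volume would be expected to come from PutBlob and PutBlock.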

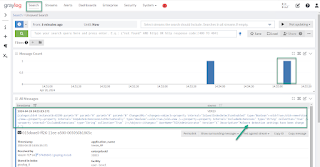

To run the query, go to

your Log Analytics workspace > Logs > New Query. Paste the query from

above, select the time range for the last 7 days, and press Run:

The output gives you the changed size in GB per day, which you can use to estimate the resources required for blob storage backups. In this example, it shows only the last 3 days because logs have been sent to the workspace for only 3 days.
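For sizing, a single average and peak figure is often easier to work with than a row per day. The sketch below adds a second summarize step on top of the daily totals; AvgDailyGB, PeakDailyGB, and DaysWithData are illustrative names.

```kql
// Step 1: total changed GB per day (same logic as the basic query).
// Step 2: collapse the daily rows into average, peak, and the number of days with data.
StorageBlobLogs
| where TimeGenerated > ago(7d)
| where OperationName in ("PutBlob", "PutBlock", "PutBlockList", "SetBlobProperties", "SetBlobMetadata")
| summarize DailyChangedGB = sum(todouble(ContentLengthHeader)) / 1073741824 by bin(TimeGenerated, 1d)
| summarize AvgDailyGB = avg(DailyChangedGB),
            PeakDailyGB = max(DailyChangedGB),
            DaysWithData = count()
```

Days without any logged write operations produce no row in the first step, so the average is taken over days with data only. Check DaysWithData before relying on the result.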

You can go further with the data by visualizing it in Azure Dashboards or creating alerts. However, for the purpose of deploying the backup infrastructure, advanced data manipulation is not needed.

Best Practices & Considerations

· Retention: Ensure your Log Analytics workspace retains data for as long as you need for analysis, auditing, or compliance. Remember that longer retention incurs higher costs.

· Cost: Frequent and verbose logging can incur costs. Scope logs and queries to only what you require, and check your costs.

· Security: Protect access to diagnostic logs, as they may contain sensitive activity information.

· Implementation: Start on a per-region basis. Select the storage accounts in each region that are representative enough to generalize from.
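One way to keep an eye on the cost side is to check how much data the diagnostic setting itself sends to the workspace. The sketch below assumes the workspace's standard Usage table, where Quantity is reported in MB and DataType holds the destination table name; dividing by 1000 follows the convention commonly used in Azure Monitor usage queries. Treat the result as an approximation of ingested volume, not as a billing statement.

```kql
// Approximate daily ingestion volume of blob diagnostic logs into this workspace.
Usage
| where TimeGenerated > ago(7d)
| where DataType == "StorageBlobLogs"
| summarize IngestedGB = sum(Quantity) / 1000 by bin(TimeGenerated, 1d)
| sort by TimeGenerated asc
```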

Conclusion

Monitoring Azure Blob daily change rates using diagnostic settings, Log Analytics, and KQL is easy to set up and doesn’t require external processing of data, although it may incur additional costs. Other options exist, such as Blob Inventory, but they require additional data processing.